Part 1 outlined two notions of bullshit: Harry Frankfurt’s notion of bullshit as phoniness or indifference to truth, and Jerry Cohen’s notion of bullshit as unclarifiable unclarity.

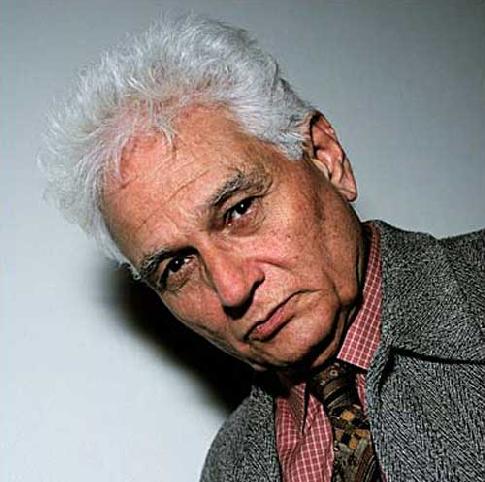

We saw too that Cohen claimed – very naughtily, without references – that there is a lot of bullshit in Derrida. Such sentiments are quite widespread.

I’m only going to look at one passage by Derrida which has been called bullshit by Brian Leiter, a prominent philosopher who is bitingly critical of Derrida on his excellent blog, Leiter Reports. Leiter has a deliciously acerbic approach to ‘frauds and intellectual voyeurs who dabble in a lot of stuff they plainly don’t understand’. Leiter is a Nietzsche expert who reserves special vitriol for Derrida’s ‘preposterously stupid writings on Nietzsche’, the way Derrida ‘misreads the texts, in careless and often intentionally flippant ways, inventing meanings, lifting passages out of context, misunderstanding philosophical arguments, and on and on’.

I’ll focus solely on Leiter’s 2003 blog entry, ‘Derrida and Bullshit’, which attacks the ‘ridiculousness’ of Derrida’s comments on 9/11. The comments came from an interview with Derrida in October 2001. Here is an abbreviated version; you can see the full thing on p. 85 onwards of this book.

… this act of naming: a date and nothing more. … [T]he index pointing toward this date, the bare act, the minimal deictic, the minimalist aim of this dating, also marks something else. Namely, the fact that we perhaps have no concept and no meaning available to us to name in any other way this ‘thing’ that has just happened … But this very thing … remains ineffable, like an intuition without concept, like a unicity with no generality on the horizon or with no horizon at all, out of range for a language that admits its powerlessness and so is reduced to pronouncing mechanically a date, repeating it endlessly, as a kind of ritual incantation, a conjuring poem, a journalistic litany or rhetorical refrain that admits to not knowing what it’s talking about.

9/11 turned the world upside down.

Or at least 45 degrees to the side.

So, is this bullshit, on the Frankfurt and/or the Cohen notions of bullshit? I would say no. I take Derrida to be saying the following.

We often repeat the name ‘9/11’ without thinking much about it. But the words we use can be very revealing. Why do we try to reduce this complex event to such a simple term? Because the event is so complex we cannot capture it properly. Precisely by talking about it in such a simple way, we admit that we don’t really understand it.

If I have understood Derrida – tell me if I haven’t – this explanation is surely wrong. I’d guess that in most cases we call such events by a name, usually a place or a thing. For example:

- Pearl Harbor, the Somme, Gallipoli, the Korean War

- the Great Fire of London, Hurricane Katrina

- Watergate, the execution of Charles I, the storming of the Bastille

- Chernobyl, Bhopal, Exxon Valdez

My guess is that we are most likely to use a date where we cannot restrict an event to a place or name:

- Arab Spring

- (May) 1968 riots

- the 1960s

- Black Tuesday, Black Wednesday

But my guess is that such names are rarer: places or things are usually more identifiable.

So, why was 9/11 called ‘9/11’, ‘September the 11th’? My guess is that it would usually have been called ‘the attack on the Twin Towers’ except for the fact that there were two other locations: an attack on the Pentagon, and a plane that crashed in Pennsylvania. I’m also guessing that ‘9/11’ had a ring to it because of the shop ‘7/11’. If the attack had happened in just one location on February 9th, we’d simply refer to the place.

I might be wrong. Other explanations will be gratefully received. But if I’m right, it suggests that Derrida’s explanation is a bit pompous, and probably wrong, but it is not Frankfurt-bullshit, because it is not attempting to deceive anyone, and it is not Cohen-bullshit, because it is not unclarifiably unclear.

There’s a deeper point here, about method. Philosophers and literary theorists often ask questions which are essentially empirical. Derrida’s question is empirical: what explains the name ‘9/11’? To answer empirical questions, it is best to use a scientific approach – for example, looking at more than just one possible explanation. In the fortnight that BlauBlog has been active, this is a point I’ve already made several hundred and fifty times.

Derrida, however, does not think like a social scientist. As a result, his explanation only seems plausible because he has not considered the alternatives.

In short, what Derrida said is crap, but not bullshit.

Of course, some readers will be more interested in my broader claims about the relationship between political theory and science. But note that I don’t equate the two: there are parallels, but also important differences. By contrast, I do argue elsewhere that some textual interpretation is essentially scientific: we often ask empirical questions (like what Locke meant by ‘rights’ or why he wrote what he wrote), and scientific ideas are the best tools we have yet developed for answering such questions. (See

My chapter included a critique of Gadamer’s account of science, in his book Truth and Method and elsewhere.
